Difference between revisions of "Petting Zoo"

Latest revision as of 19:15, 18 February 2020

Under construction! Once finished, the Petting Zoo will introduce complexity theory to newcomers unready for the terrifying and complex beasts lurking in the main zoo. It will be a gentler source of information about major complexity classes, problems, and reductions. It is meant to be self-contained and does not require previous knowledge of complexity theory, aside from some basic terminology that can be found in the glossary. In particular, one should be familiar with the terms alphabet, word and language.

Modeling Computation

In order to discuss computational complexity, we must first agree on a way of talking about computation. Specifically, we want to talk about computation in a neutral way; we want some way of talking about computation that is general enough to apply to whatever computer you may want to use, be it a wristwatch or a supercomputing cluster. The model that we shall use to do so is that of the Turing machine, named after its inventor Alan Turing.

Deterministic Turing Machine

A Turing machine is an abstract model of a computer that has an internal state, an infinitely long tape for scratch work when computing, and a read/write head that can move left or right to any point on the tape. For the purposes of our discussion, we shall assume that whatever problem our Turing machine is designed to solve is a language over some alphabet <math>\Sigma</math>.

The Tape

The tape is essentially an infinitely long string over some alphabet <math>\Gamma</math>, called the tape alphabet. The tape alphabet has to contain at least every symbol of <math>\Sigma</math>, but may also contain some additional symbols useful to an algorithm. In addition, the tape alphabet contains a special "blank" symbol (different authors write it in different ways). Some authors require more special symbols to be in <math>\Gamma</math>, but for our purposes, we won't worry about any such additional symbols.

The States

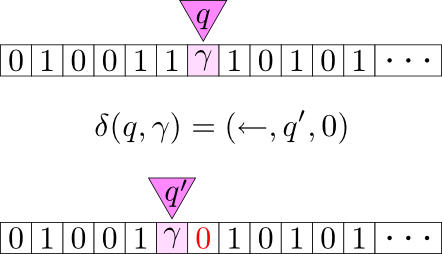

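A Turing machine also has a finite set of states <math>Q</math> and a transition function <math>\delta:Q\times\Gamma\to Q\times\Gamma\times\{\leftarrow,\rightarrow\}</math>, which tells the machine what to do when it is reading a given symbol and is in a given state. Specifically, <math>\delta(q,\gamma) = (q', \gamma', d)</math> means that if the Turing machine is in state <math>q</math> and is reading a symbol <math>\gamma</math>, then it should write a <math>\gamma'</math> onto the tape, enter the new state <math>q'</math> and move the head in direction <math>d</math>.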

The set of states includes three special states: the start state <math>q_0</math> in which the machine starts, an accept state <math>q_{\mbox{accept}}</math> and a reject state <math>q_{\mbox{reject}}</math>. Whenever computation reaches the accept state, the machine halts and accepts the input string, and when computation reaches the reject state, the machine halts and rejects the input string.

You may think of the set of states and the transition function as completely describing the behavior of a Turing machine. Indeed, to describe a specific Turing machine to someone, it is sufficient to give them a tuple <math>(Q,\Sigma,\Gamma,\delta,q_0,q_{\mbox{accept}},q_{\mbox{reject}})</math>.

Computing and Deciding Problems

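Each Turing machine decides a decision problem <math>f:\Sigma^*\to\{0,1\}</math>, accepting some input <math>x</math> (that is, a string over the alphabet <math>\Sigma</math>) if and only if <math>f(x) = 1</math>. The machine is given its input as the initial state of its tape: we assume that the tape originally contains the string <math>x</math> padded by an infinite number of blanks. Since the blank symbol is not in <math>\Sigma</math>, the machine "knows" where the ends of the input are.

It proceeds to apply the transition function again and again, stopping if it reaches either <math>q_{\mbox{accept}}</math> or <math>q_{\mbox{reject}}</math>.

Of course, a Turing machine need not actually reach either halting state, as it could loop forever. Also, note that we only require that a Turing machine halt if <math>f(x) = 1</math>. If the answer to a problem is "no," the machine is allowed to loop.

It is sometimes convenient to discuss a Turing machine <math>M</math> as a function <math>M:\Sigma^*\to\{\mbox{accept},\mbox{reject}\}</math> such that:

<math>M(x) = \begin{cases}\mbox{accept} & \mbox{if } M \mbox{ halts in } q_{\mbox{accept}} \mbox{ on input } x\\ \mbox{reject} & \mbox{if } M \mbox{ halts in } q_{\mbox{reject}} \mbox{ on input } x\end{cases}</math>

Thus, using this notation, we can say that a Turing machine <math>M</math> decides a decision problem <math>f</math> if, given any input <math>x</math>, <math>M(x) = \mbox{accept}</math> if and only if <math>f(x) = 1</math>. We say that any decision problem which admits such a machine is decidable.

Since decision problems and languages are different characterizations of the same concept, we also say that a Turing machine <math>M</math> recognizes a language <math>L</math> if, for any input <math>x</math>, <math>M(x) = \mbox{accept}</math> if and only if <math>x \in L</math>. We say that a language is decidable if there exists a Turing machine deciding the language.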
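The repeated application of the transition function can be sketched as a short simulator. This is only an illustration under stated assumptions: the blank is spelled "_", directions are spelled "L" and "R", the transition table is total on every situation the machine reaches, and the function name, the `max_steps` cutoff and the example machine `EVEN_ONES` are all hypothetical additions rather than part of the Petting Zoo.

```python
from collections import defaultdict

BLANK = "_"  # assumed spelling of the blank symbol for this sketch


def run_tm(delta, start, accept, reject, tape_input, max_steps=10_000):
    """Simulate a deterministic Turing machine.

    delta maps (state, symbol) -> (new_state, written_symbol, direction),
    with direction "L" or "R". Returns "accept", "reject", or None when the
    step budget runs out (the machine may be looping).
    """
    tape = defaultdict(lambda: BLANK)  # every unwritten cell reads as blank
    for i, symbol in enumerate(tape_input):
        tape[i] = symbol
    state, head = start, 0
    for _ in range(max_steps):
        if state in (accept, reject):
            break
        # Look up the move for the current state and the symbol under the head,
        # then write, change state, and shift the head by one cell.
        state, tape[head], move = delta[(state, tape[head])]
        head += 1 if move == "R" else -1
    if state == accept:
        return "accept"
    if state == reject:
        return "reject"
    return None


# Hypothetical example machine: accept binary strings with an even number of 1s.
EVEN_ONES = {
    ("even", "0"): ("even", "0", "R"),
    ("even", "1"): ("odd", "1", "R"),
    ("even", BLANK): ("acc", BLANK, "R"),
    ("odd", "0"): ("odd", "0", "R"),
    ("odd", "1"): ("even", "1", "R"),
    ("odd", BLANK): ("rej", BLANK, "R"),
}

print(run_tm(EVEN_ONES, "even", "acc", "rej", "1001"))
```

The step budget is a practical concession: a real Turing machine has no such limit, and no simulator can tell in general whether a machine that has not yet halted ever will.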

Power of a Turing Machine

This model of computation is very powerful. In fact, any problem decidable by a real-world computer is decidable by a Turing machine as well. In particular, the Turing machine model has the remarkable property that there exists a universal Turing machine that can simulate any other Turing machine given its description. Showing this is beyond the scope of the Petting Zoo, but it means that we can show the power of the Turing machine model by showing that there exists a Turing machine that simulates other models of computation. To that end, there exist reductions from any other model of computation that is generally considered to be "reasonable" (that is, physically realizable) to a simulation on a Turing machine.

We can thus be completely general and deal with the Turing machine only. This gives us a lot of advantages, such as the locality of a Turing machine's operation. Note that the function <math>\delta</math> only considers one symbol of the input at a time, and can only reach symbols adjacent to the tape head. This allows us to more easily prove some nice theorems, such as the Cook-Levin Theorem.

Non-deterministic Turing Machine

What we just described is a deterministic Turing machine. This is because given any current state and a character of the input word, the Turing machine can move to one and only one possible state.

However, in the same situation, a non-deterministic Turing machine could have zero, one, or more than one possible state it can choose to move to. This means that on any given word w, a non-deterministic Turing machine has many different computational paths to choose from. If any of these paths leads to the accept state (even if some of the other paths lead to the reject state), the Turing machine accepts the word. The non-deterministic Turing machine does not have to check all the possible computational paths because, if there exists an accepting computation path for a word, the non-deterministic Turing machine will "guess" correctly at each step which branch to follow in order to reach the accepting state.

This feature of the non-deterministic Turing machine lets it compute at least as fast as, and possibly faster than, a deterministic Turing machine. However, it does not let the non-deterministic Turing machine recognize any additional languages, because for every non-deterministic Turing machine there exists an equivalent deterministic Turing machine which recognizes the same language. Conversely, a deterministic Turing machine is a special case of a non-deterministic Turing machine, so trivially there is a non-deterministic Turing machine for every deterministic Turing machine (i.e., the same one).
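One way to see why non-determinism adds no new languages is to let a deterministic program explore every computational path breadth-first. The sketch below is a minimal illustration, not the standard textbook construction in full: the blank spelling "_", the "L"/"R" direction names, the `max_steps` depth limit and the example machine `CONTAINS_11` are assumptions made for this example.

```python
from collections import deque

BLANK = "_"  # assumed spelling of the blank symbol for this sketch


def ntm_accepts(delta, start, accept, reject, tape_input, max_steps=50):
    """Deterministically explore every branch of a non-deterministic machine.

    delta maps (state, symbol) -> set of (new_state, written_symbol, direction).
    A missing key means no move is possible, so that branch simply dies.
    Returns True if some branch reaches the accept state within max_steps.
    """
    start_config = (start, 0, tuple(tape_input) or (BLANK,))
    frontier = deque([(start_config, 0)])
    seen = {start_config}
    while frontier:
        (state, head, tape), depth = frontier.popleft()
        if state == accept:
            return True
        if state == reject or depth == max_steps:
            continue
        for new_state, written, move in delta.get((state, tape[head]), ()):
            cells = list(tape)
            cells[head] = written
            new_head = head + (1 if move == "R" else -1)
            if new_head < 0:  # grow the stored tape to the left
                cells.insert(0, BLANK)
                new_head = 0
            elif new_head == len(cells):  # grow the stored tape to the right
                cells.append(BLANK)
            config = (new_state, new_head, tuple(cells))
            if config not in seen:
                seen.add(config)
                frontier.append((config, depth + 1))
    return False


# Hypothetical example: "guess" where a pair of adjacent 1s begins.
CONTAINS_11 = {
    ("scan", "0"): {("scan", "0", "R")},
    ("scan", "1"): {("scan", "1", "R"), ("saw1", "1", "R")},
    ("saw1", "1"): {("acc", "1", "R")},
}

print(ntm_accepts(CONTAINS_11, "scan", "acc", "rej", "0110"))
```

The number of configurations in the frontier can grow exponentially with the depth, which is why this simulation shows equivalence in what can be recognized but says nothing about matching the non-deterministic machine's speed.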

Most Important Classes

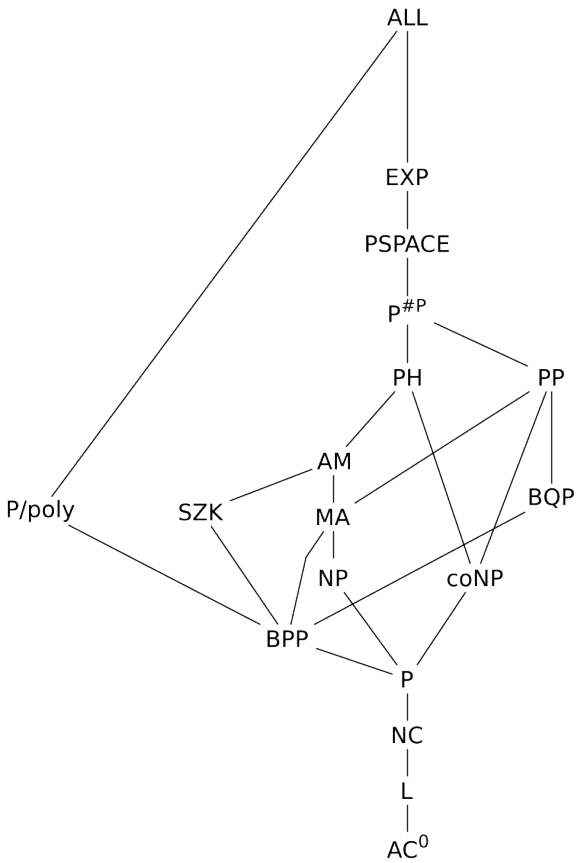

A complexity class is a set of languages that share some property. For the most part, the shared property is described in terms of the amount of some resource, such as time or memory, needed by a Turing machine recognizing a language.

Here, we describe some of the most important complexity classes, where "important" is to be understood in terms of what classes get referenced the most, which classes quintessentially describe the character of other classes and which classes are the most fundamental to learning about complexity theory. Where applicable, we also provide links to problems which somehow capture the character of a class, or otherwise provide good examples of what the problems in a class look like.

P

P is where it all starts. Informally, P is the class of problems which we can solve efficiently using a conventional algorithm. More precisely, P is the class of all problems solvable by a deterministic Turing machine bounded in time by a polynomial function of the input length. Thus, problems in P scale well to large instances.

Note that even though P does not preclude algorithms bounded by polynomials with unreasonably large degrees, in practice it has often turned out that whenever such an algorithm is discovered, it is later improved to an algorithm with a more reasonable degree. As such, the division between problems in P and the rest of the complexity landscape has turned out to be somewhat "natural," in that it describes a boundary with clear practical implications. It is for this reason that we have such an interest in P, and justify calling problems in P tractable.

Notable problems in P:

- Graph reachability

- 2SAT

- Matrix multiplication
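As a concrete taste of a problem in P, graph reachability can be decided by breadth-first search in time linear in the size of the graph. The sketch below assumes the graph is given as a dictionary from each vertex to its out-neighbours; that representation and the function name are choices made for this example.

```python
from collections import deque


def reachable(graph, source, target):
    """Return True if target can be reached from source in a directed graph.

    graph maps each vertex to an iterable of its out-neighbours.
    """
    seen = {source}
    queue = deque([source])
    while queue:
        vertex = queue.popleft()
        if vertex == target:
            return True
        for neighbour in graph.get(vertex, ()):
            if neighbour not in seen:  # each vertex is enqueued at most once
                seen.add(neighbour)
                queue.append(neighbour)
    return False


example = {"a": ["b"], "b": ["c"], "c": [], "d": ["a"]}
print(reachable(example, "a", "c"), reachable(example, "c", "a"))
```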

NP and coNP

NP is, informally, the set of problems such that someone can convince you of a yes answer in a reasonable amount of time. That is, there must be a short proof (often called a witness) for each string in an NP language, as well as an algorithm in P that verifies the proofs.
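For instance, a witness for a satisfiable Boolean formula is simply a satisfying assignment, and checking it takes time linear in the size of the formula. The sketch below assumes a formula in conjunctive normal form encoded as lists of nonzero integers (a positive integer k stands for variable k, a negative one for its negation); this encoding and the function name are assumptions of the example.

```python
def verify_sat(clauses, assignment):
    """Check a claimed witness for a CNF formula in polynomial time.

    clauses is a list of clauses, each a list of nonzero integer literals.
    assignment maps variable numbers to True or False. A variable missing
    from the assignment satisfies no literal, so a malformed witness is
    rejected rather than trusted.
    """
    return all(
        any(assignment.get(abs(literal)) == (literal > 0) for literal in clause)
        for clause in clauses
    )


# (x1 or not x2) and (x2 or x3) and (not x1 or not x3)
formula = [[1, -2], [2, 3], [-1, -3]]
print(verify_sat(formula, {1: True, 2: True, 3: False}))
```

Note the asymmetry: the verifier only checks a proposed answer. Nothing here says how to find the assignment in the first place.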

The central open problem in complexity theory is whether the existence of such witnesses implies a fast algorithm to solve the problem; that is, whether P = NP. It is widely conjectured that P ≠ NP, but a proof of that conjecture remains frustratingly elusive.

Notice that we make no statement about needing to be able to prove that a string isn't in an NP language. More precisely, if we have a Boolean function <math>f</math> in NP, then it is not necessarily the case that the complement of <math>f</math> is in NP. Thus, unlike P, NP is not known to be closed under complement. Because of this, we also discuss the class coNP of problems for which we can always construct proofs of "no" instances.

Notable problems in NP:

NP-complete

By the Cook-Levin Theorem, any problem in NP is reducible in polynomial time to a problem called CircuitSAT. Thus, if a fast algorithm is ever invented for CircuitSAT, then it will have been established that every problem in NP has a fast algorithm, and thus that P = NP. We thus say that CircuitSAT is complete for NP.

Similarly, if we can reduce CircuitSAT to some other NP problem in polynomial time, then a fast solution to the new problem would imply that we could solve CircuitSAT quickly, and in turn solve any NP problem quickly. Thus, we also call any such problem complete. The class NP-complete is precisely the set of problems X in NP such that every other NP problem reduces to X. We define coNP-complete in the same manner.

Notable NP-complete problems:

- Travelling Salesman Problem (TSP D)

- Satisfiability (SAT)

Notable coNP-complete problems:

PH

The class PH is an example of a hierarchy; that is, a class which is the union of a set of recursively defined classes. The precise details of the construction of PH are given in its full Zoo entry, but informally, PH is built up by considering P, P with an "oracle" for solving NP and coNP problems, P with an oracle for NP and coNP with an oracle for NP and coNP, and so on.

Under various implausible hypotheses, we can prove that PH "collapses" down to a finite "stack" of oracles. For example, if NP = coNP, then PH collapses to NP. Often, the fact that if a statement X is true then PH collapses to a finite level, is taken as evidence against X.

PSPACE

Whereas P is a class of problems that can be solved in a polynomially-bounded amount of time, PSPACE is the class of problems that can be solved by a deterministic Turing machine that uses only a polynomially-bounded amount of space, regardless of how long the computation takes. Perhaps unsurprisingly, restricting by space instead of time allows for a lot more power. For instance, it is trivial to see that PSPACE contains every problem in NP: simply iterate through all possible proof strings (which have to be polynomially short) and check each one in turn. If any proof string works, then the answer to the problem is "yes." Otherwise, you know that the answer must be "no," since otherwise you would have found a valid proof string. Similar arguments show that PSPACE is a very powerful class indeed, containing the entire polynomial hierarchy, along with PP and <math>P^{\#P}</math>.
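The "iterate through all proof strings" argument can be sketched for Boolean satisfiability. The code below is only an illustration: the CNF encoding (lists of nonzero integers, negative meaning negated) and both function names are assumptions of this example. It takes exponential time, but only one candidate assignment is held at any moment, which is the point of the argument.

```python
from itertools import product


def satisfies(clauses, bits):
    """Polynomial-time check; variable k takes the value bits[k - 1]."""
    return all(
        any(bits[abs(literal) - 1] == (literal > 0) for literal in clause)
        for clause in clauses
    )


def brute_force_sat(clauses, num_vars):
    """Decide satisfiability by trying every candidate proof string in turn."""
    # product() yields one assignment at a time, so the space in use stays
    # proportional to num_vars even though there are 2**num_vars candidates.
    for bits in product((False, True), repeat=num_vars):
        if satisfies(clauses, bits):
            return True
    return False


print(brute_force_sat([[1, -2], [2, 3], [-1, -3]], 3))
```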

Notable PSPACE-complete problems:

- QBF, also known as QSAT.

- Deciding the winners of many kinds of games, including Geography and Go.

EXP

Like P, EXP is a class of problems solvable by deterministic Turing machines subject to some time bounds. Whereas P machines are bounded by polynomial time, an EXP machine may take time on the order of <math>2^{p(n)}</math>, where <math>p</math> is a polynomial and <math>n</math> is the input length.

EXP contains nearly every other class that we regularly concern ourselves with, including PSPACE and the polynomial hierarchy. Of course, we can always construct classes of still harder problems, such as NEXP and EEXP, but from a simplistic point of view, EXP is "big enough" to contain most problems we ever hope to attack.

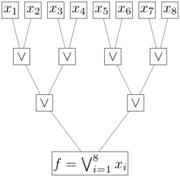

AC0

AC0 is one of the smallest interesting complexity classes, as well as one of the few for which unconditional lower bounds are known. Formally, AC0 consists of all problems that are solvable using polynomial-size, constant-depth, unbounded-fanin circuits of AND, OR, and NOT gates. Recall that the depth of a Boolean circuit is the length of the longest path from input to output, and in some sense measures the "time" needed for the circuit to compute a function (ignoring wire delays). Thus, every AC0 function is computable in "constant time," albeit with massive amounts of parallelism. The fanin is the maximum number of input wires to any gate. If both the fanin and the depth were bounded, then we'd get the even tinier class NC0, consisting of problems for which every bit of the output depends on only O(1) bits of the input. AC0, by contrast, contains functions such as AND and OR, which depend in an interesting way on all n of the input bits. On the other hand, fundamental results from the 1980's established that the PARITY and MAJORITY functions cannot be expressed (or even well approximated) in AC0. Later these results were turned around, to show that every function in AC0 is in some sense efficiently learnable. These results are often considered among the crowning achievements of the field. Loosely speaking, the ultimate goal of complexity theory is to understand classes like P and NP as well as we understand AC0.

NC

NC is, intuitively, the class of problems in P that are solvable by very fast parallel algorithms. More formally, NC is the class of problems that are solvable by uniformly-generated Boolean circuits with polynomially many gates and polylogarithmically-bounded depth.

L

As with PSPACE, L is a class based upon space limitations, rather than the time limitations in the definitions of P and EXP. In particular, L is the set of problems solvable by a deterministic Turing machine which uses no more than a logarithmic amount of extra memory (i.e., memory besides that needed to write down the input itself). This class has important practical implications, as we often want algorithms that run using only modest amounts of space.

It is easy to see that L is contained in P, since a deterministic Turing machine subject to the logarithmic-space restriction cannot pass through more than a polynomially-bounded number of configurations without repeating one, and a machine that repeats a configuration loops forever. Thus, no language in L can take more than polynomial time to decide.
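The counting behind this argument can be written out explicitly. The bound below is a sketch under an assumed machine model (a read-only input of length n with two end markers, plus a single work tape of s(n) cells); other conventions change the constants but not the conclusion.

```latex
% A configuration = (state, input-head position, work-head position, work-tape contents)
\#\text{configurations} \;\le\; |Q| \cdot (n + 2) \cdot s(n) \cdot |\Gamma|^{s(n)}

% With logarithmic space, s(n) = c \log_2 n, the exponential factor becomes polynomial:
|\Gamma|^{c \log_2 n} \;=\; n^{\,c \log_2 |\Gamma|}
```

Since a halting deterministic machine never visits the same configuration twice, its running time is at most this polynomial number of configurations.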

P/poly

P/poly is an example of a complexity class based on the notion of advice. In particular, P/poly is the set of languages decidable by P machines given advice strings whose lengths are bounded by a polynomial in the input length.

Advice strings are strings that depend only on the length of the input, not on the input itself, but can otherwise be chosen arbitrarily to help the algorithm. It is not immediately obvious that advice is useful. Note, however, that if we allowed a polynomial-time algorithm access to an exponentially-long advice string (call such a class P/exp), then we could decide any language whatsoever, by simply giving a giant lookup table as advice.

Such clear results are much harder to find for the more modest P/poly. However, one can certainly say that P is contained in P/poly. This implies that one could show P ≠ NP by showing that NP is not in P/poly.

BPP

Intuitively, BPP is the class of problems that are solvable efficiently by randomized algorithms. Formally, BPP is defined as the class of languages which can be solved in polynomial time by a P machine with access to a fair coin, with the constraint that the algorithm's error rate is bounded. By that, we mean that a BPP machine given a "yes" instance must return "yes" at least 2/3 of the time, and a BPP machine given a "no" instance must return "yes" at most 1/3 of the time. The choices of constants in the definition are arbitrary, as we can amplify the accuracy of a BPP algorithm by repeating it several times and taking a majority vote, provided that the error rate is bounded away from 1/2 by some constant.
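The effect of the majority vote can be computed exactly from the binomial distribution. The sketch below assumes the runs are independent and that each run errs with the same probability; the function name is hypothetical.

```python
from math import comb


def majority_error(single_error, runs):
    """Probability that a majority vote over independent runs is wrong.

    single_error is the chance that one run gives the wrong answer;
    runs must be odd so that the vote cannot tie.
    """
    if runs % 2 == 0:
        raise ValueError("use an odd number of runs to avoid ties")
    wrong_needed = runs // 2 + 1  # the vote fails when most runs are wrong
    return sum(
        comb(runs, k) * single_error**k * (1 - single_error) ** (runs - k)
        for k in range(wrong_needed, runs + 1)
    )


for runs in (1, 3, 11, 101):
    print(runs, majority_error(1 / 3, runs))
```

With a single-run error of 1/3, three runs already bring the error down to 7/27, and the error keeps shrinking exponentially in the number of runs. At a single-run error of exactly 1/2 the vote does not help at all, which is why the definition needs a constant gap.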

For a while, the most striking application of algorithmic randomness was to primality testing. In 2002, however, the AKS primality test (named in honor of its inventors) was developed, which deterministically tests the primality of an integer in polynomial time. This is a key example of derandomization.

Based on mounting evidence, most complexity theorists conjecture today that P = BPP. Along with P versus NP, this is one of the central open problems of the field.

MA

MA is the class of problems solvable by Merlin-Arthur games, which involve two players named Merlin and Arthur. Given an instance of some problem, Merlin, who possesses unbounded computational resources but is not necessarily trustworthy, sends Arthur a polynomially-sized proof that the answer is "yes." For his part, Arthur has the power of a BPP machine, and must verify the proof. We require that if the answer really is "yes," then there exists a valid proof causing Arthur to accept with probability at least 2/3, while if the answer is "no," then no purported proof causes Arthur to accept with probability greater than 1/3.

In this way, MA acts like a randomized generalization of NP. If Arthur did not use any of the random bits he has access to, then it is easy to see that this construction would be an elaborate way of describing NP. Thus, <math>NP \subseteq MA</math>.

MA is also a good example of a class based on a communication protocol between several "players." Other examples include SZK and AM.

AM

AM is the class of problems solvable by Arthur-Merlin games. Such games are similar to Merlin-Arthur games, but with the important difference that Arthur gets to ask Merlin a question. In particular, Arthur generates a challenge string based on a problem instance as well as a supply of random bits, and sends this challenge to Merlin. Next, Merlin sends Arthur a response. Finally, Arthur decides whether to accept or not based on Merlin's response and the problem instance. As with MA and BPP, we require that the error probability be bounded so that gap amplification can be performed efficiently.

In this way, AM is a generalization of SZK in which we allow for the possibility of Arthur learning details from the protocol other than whether a given instance is a "yes" instance or a "no" instance. AM also generalizes MA, since Arthur can simply send Merlin an empty string for his challenge. Strict inclusion proofs, however, are as elusive for AM as for pretty much all other complexity classes.

SZK

SZK (Statistical Zero Knowledge) is the class of problems for which a "yes" answer can be verified by a statistical zero-knowledge proof protocol. These protocols involve a notion of "proof" that is somewhat different from the traditional one, but is of crucial importance (for example) in cryptography. Essentially, these protocols consist of a BPP machine (the verifier) as well as a prover with unbounded computational power, who needs to convince the verifier that a statement is true without revealing anything about why it's true. (In particular, the verifier should not gain the ability to convince anyone else of the statement in question.) For more details, please visit the full Zoo entry for SZK.

Notable problems in SZK:

BQP

BQP, informally, is the class of all problems that admit an efficient solution by quantum computers. Since quantum computers are inherently probabilistic, BQP is a closer analogue to BPP than to P. Formally, BQP is the class of languages decidable by a uniform family of quantum circuits (that is, such that each circuit is produced by a P machine) involving no more than a polynomial number of gates drawn from a specified set of universal quantum gates (such as the Toffoli and Hadamard gates, or the CNOT and π/8 gates), subject to the same bounded-error constraints as BPP (we conventionally require a success probability of 2/3).

If that definition seems overly involved, it's because for many questions relating to quantum computing, the quantum circuit model turns out to be much more natural than a quantum generalization of the Turing machine, and so we have to deal with the issues of determining universal sets of gates and enforcing uniformity.

Note that one can just multiply all of the gates in a quantum circuit together into a single giant gate, but that this is unphysical, since real-world interactions are local. It is for this reason that we require BQP circuits be built up from a universal set of gates, which act locally on small numbers of qubits.

Similarly, if we did not require uniformity, we could decide even undecidable languages. For example, let <math>L</math> be any undecidable language, and consider the unary language <math>L' = \{0^n : n \in L\}</math>, where <math>n</math> is identified with its binary encoding. Then we can build a non-uniform circuit family that solves <math>L'</math>, by simply having the circuit for size n accept if and only if <math>n \in L</math>. Thus, we have essentially "offloaded" all the processing for <math>L'</math> onto the process of constructing the circuits. Uniformity prevents this obviously ridiculous situation.

Given the present state of complexity theory, we cannot hope for an unconditional proof that BQP is strictly larger than P or BPP. However, Shor's famous result that the factoring and discrete logarithm problems are in BQP provides evidence that BPP ≠ BQP.

#P

In a departure from the other classes we've considered here, #P is a class not of languages, but of function problems. Intuitively, #P problems involve counting the number of solutions of an NP problem. More precisely, the members of #P are functions <math>f:\Sigma^*\to\mathbb{N}</math>, where <math>f(x)</math> is the number of accepting paths of an NP machine on input <math>x</math>.
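To make the shift from deciding to counting concrete, the sketch below counts the satisfying assignments of a Boolean formula by brute force. It is an exponential-time illustration of what the function computes, not an efficient algorithm; the CNF encoding (lists of nonzero integers, negative meaning negated) and the function name are assumptions of this example.

```python
from itertools import product


def count_satisfying(clauses, num_vars):
    """Count the assignments that satisfy a CNF formula.

    Variable k takes the value bits[k - 1]. Each satisfying assignment
    plays the role of one accepting path of an NP machine that guesses
    an assignment and then checks it.
    """
    return sum(
        all(
            any(bits[abs(literal) - 1] == (literal > 0) for literal in clause)
            for clause in clauses
        )
        for bits in product((False, True), repeat=num_vars)
    )


# (x1 or not x2) and (x2 or x3) and (not x1 or not x3)
print(count_satisfying([[1, -2], [2, 3], [-1, -3]], 3))  # prints 2
```

The corresponding NP question only asks whether this count is nonzero, so the counting version is at least as hard as the decision version.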

One reason for studying this class is that it can be used as an oracle for classes of languages. In particular, if we give a P machine the ability to pose questions to a #P oracle, then we get a new class of problems which we write <math>P^{\#P}</math>. Giving access to such an oracle winds up giving us a lot of power: <math>P^{\#P}</math> contains the entire polynomial hierarchy!

As with NP, #P also has complete problems, which we call #P-complete. Notable problems in #P-complete:

PP

Like BPP, PP is a class defined in an attempt to find out what randomness allows us to do algorithmically. Formally, PP is the class of problems solvable by an NP machine such that, given a "yes" instance, strictly more than 1/2 of the computation paths accept, while given a "no" instance, strictly less than 1/2 of the computation paths accept.

The difference between PP and BPP, then, is that BPP requires there to be a noticeable gap (traditionally 1/3) between the "yes" and "no" acceptance probabilities, while PP only requires that there be some nonzero gap, and makes no requirement as to how large the gap is. Thus, the gap might get smaller with larger problem instances at an exponential rate, in which case we would need to repeat the algorithm exponentially many times to attain a noticeable gap. Thus, BPP is the more "reasonable" model, as we can differentiate between "yes" and "no" instances using few iterations.

By relaxing the error-bounding requirement, we introduce much more power. Indeed, PP contains both NP and BQP.

What Next?

OK, so now you've read about some of the basics of complexity theory, including some essential terminology, the basic models of computation, and some of the more important complexity classes. What now? If you're still reading, then you're most likely interested in going further, so here are some ideas:

- Read the resources that the Zookeeper mentions in the Introductory Essay.

- Go check out the new Complexity Dojo to learn about some important theorems.

- Browse randomly around the main Zoo.

- Check out some of the problems in the Complexity Garden.

- Take a class on complexity theory, formal languages or algorithms from your local institution of higher learning.